On May 17 at Reading University, Gemma Hodgson from Qi Statistics ran an informative discussion workshop for the IFST SSG group on moving forwards with inferential statistics. The current p-value approach focuses on the chance of seeing data as extreme as generated in an experiment if there is really no difference in treatments, and often is based on a standard (but arbitrary?) cut-off of 5% significance (p<0.05).

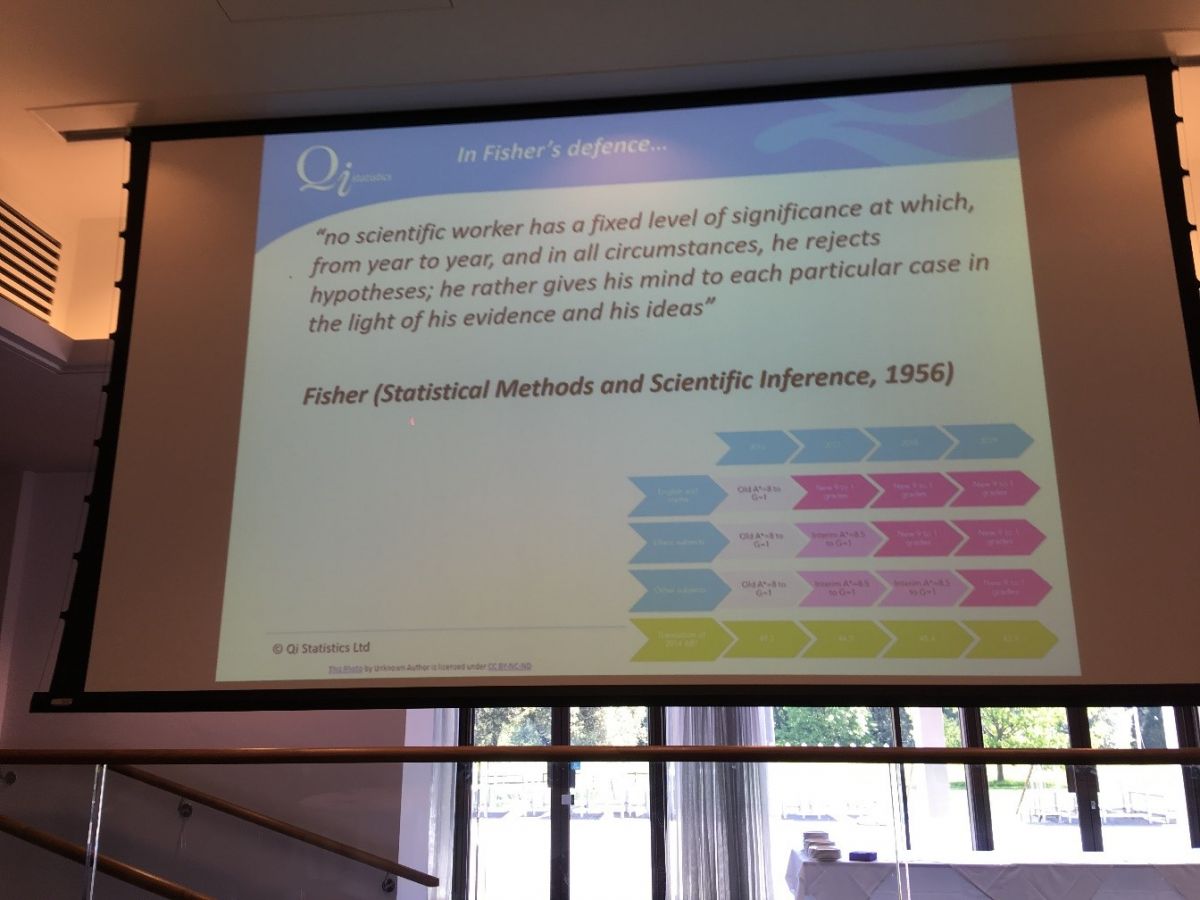

Gemma took us through a quick history of significance testing (including a famous tea party in Cambridge resulting in Fisher’s exact test), leading into current discussions about the best way to present results and make conclusions. She explained how these topics are coming up in a range of disciplines and  causing a stir in the statistical world, and funnily, that these sorts of debates are not really that new…

causing a stir in the statistical world, and funnily, that these sorts of debates are not really that new…

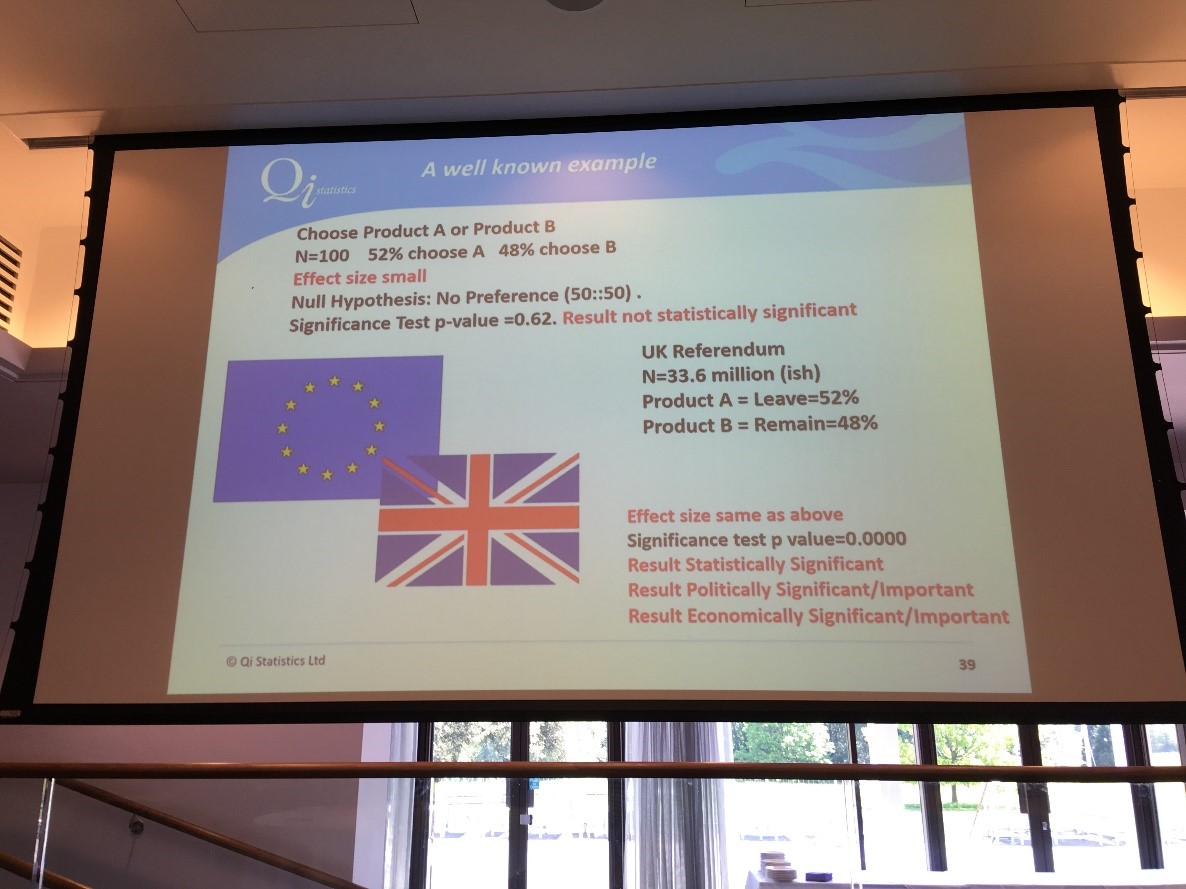

In summary, Gemma explained how over reliance on p-value can be misleading and/or lead to irrational decision making. We need to also consider effect size, context and prior information. We should be asking questions before starting an experiment such as:

What sort of results might we expect to see and why?

What size of effect is important?

How much evidence/confidence is needed to confirm we are seeing an effect?

We were treated to some highly relevant and topical examples …

Moving forwards, if we consider what effect size is important and run preliminary experiments to estimate variability, decisions can be made about sample size that ensure the design of the experiment is robust. Setting up studies to correlate consumer behaviour or preference to scale effects would help guide the efficient design and use of data from internal sensory studies. In terms of reporting, how can we move on from p-values? Presenting confidence intervals and highlighting best and worst-case scenarios (put more emphasis on size/importance of effect and precision of the data), and very importantly, discussing if results are within expected trends given the science/background to the project, can both help.

Moving forwards, if we consider what effect size is important and run preliminary experiments to estimate variability, decisions can be made about sample size that ensure the design of the experiment is robust. Setting up studies to correlate consumer behaviour or preference to scale effects would help guide the efficient design and use of data from internal sensory studies. In terms of reporting, how can we move on from p-values? Presenting confidence intervals and highlighting best and worst-case scenarios (put more emphasis on size/importance of effect and precision of the data), and very importantly, discussing if results are within expected trends given the science/background to the project, can both help.

And possibly we can start to consider Bayesian approaches which use past knowledge in a formal way to create an a-priori distribution. Then data generated in current experiments is formally combined to create a new updated distribution from which we can define 'credible' intervals – a more intuitive interval than the frequentist 95% confidence interval. All the data is used in Bayesian statistical models, which have become more possible and popular recently because of increases in computer power, more accessible software and the acknowledgement of the role that context and complexity play.

Although those attending the discussion forum thought time constraints and the need to work to clear action standards could pose some resistance to moving on from a reliance on p-value in their organisations, Gemma suggested taking a softly, softly approach; and to start to introduce data in different ways and raise key questions when research projects are commissioned, whilst also delivering the p-values the clients expect to see. Good advice and a stimulating session – thank you Gemma!

Carol Raithatha, Carol Raithatha Limited